Continuous and Extended Financial Planning Software for Your Enterprise

Do You Regularly Struggle with These Financial Planning Challenges?

Siloed and Inaccurate Data

Outdated Plans That Are Disconnected from Reality

Inability to Adapt to Quickly Shifting Markets

Integrate and extend your planning processes for more connected, proactive, and empowered teams.

Put Your Business First

Configure Tidemark according to your business processes so you don’t need to conform to inflexible software, cubes, or spreadsheets.

Make Finance a Participation Sport

Make it simple for everyone to get information, answer questions, collaborate, and participate in the decision-making process.

Run in the Now, Impact the Future

Run your most complicated scenarios and calculations in real time with a fast computational engine and rich data integration.

Key Features of Our Top-Rated Financial Planning Software

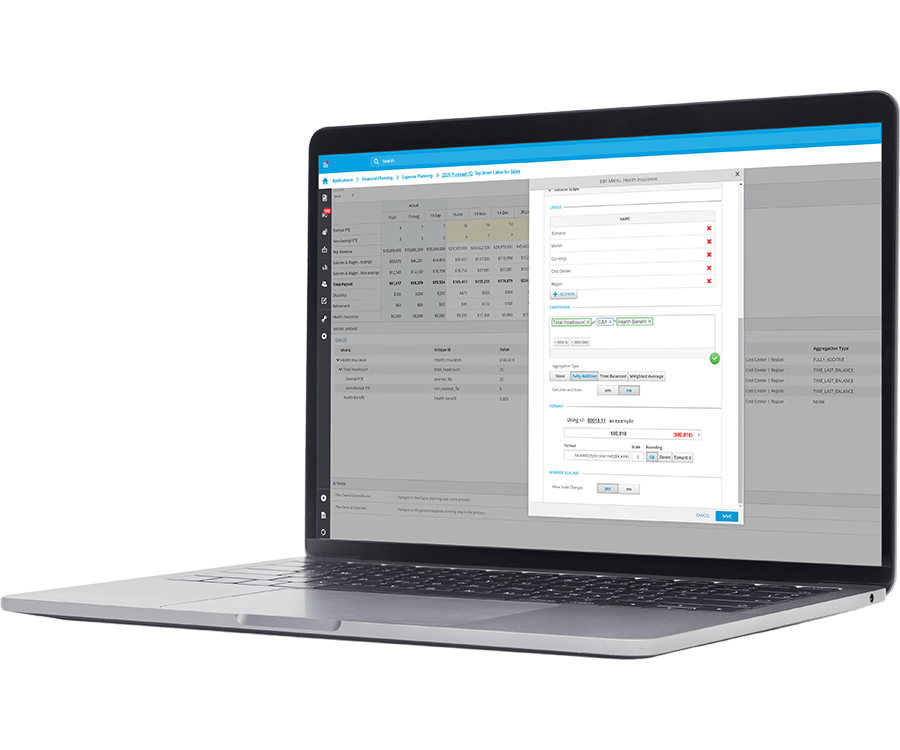

Dynamic Modeling

Model faster and more accurately

- Single model with N dimensions

- Computational modeling without code

- Model and compare what-if scenarios

- Item-level planning

- Agility to change on the fly

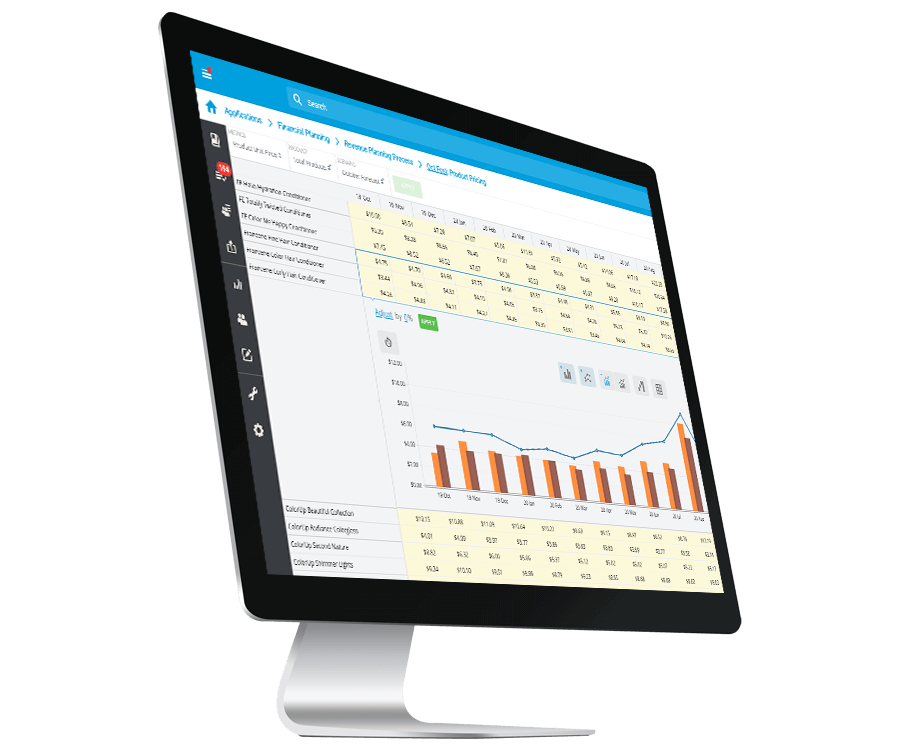

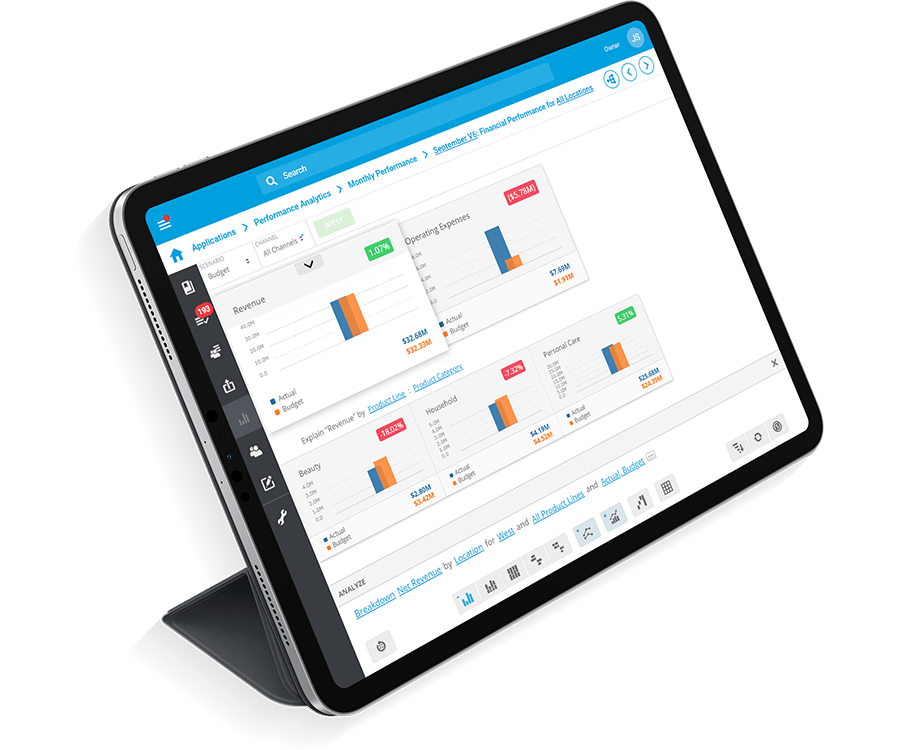

In-Line Analytics

Analyze data and uncover contextual insights within your planning processes

- Conduct variance analysis with collaborative commentary capabilities

- Drill down to operational details behind financial metrics

- Analyze data across intersecting hierarchies without reconfiguration

- Create visual narratives to communicate business performance

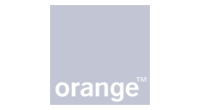

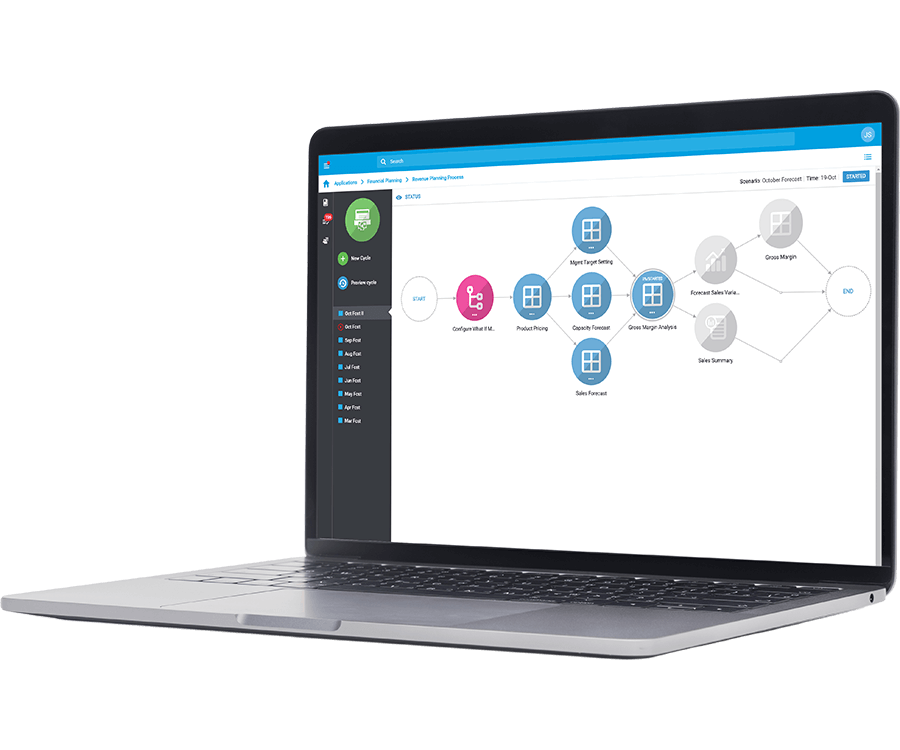

Workflow Management

Align the team while shortening your planning and budgeting cycles

- Easily map your processes with configurable approval gates

- Collaborate with your team without leaving the context of your planning process

- Automate your key planning tasks to minimize errors and speed up the process

Scalable Architecture

Secure, scalable, and elastic infrastructure

- Leverage our multi-tenant, elastic cloud architecture that scales as you grow

- Connect to your data and reports on any mobile device

- Rest easy, knowing your data is safe with our secure and trusted infrastructure

Tidemark Earns Top Rankings in BARC’s The Planning Survey 20

Download your free copy of The Planning Survey 20 and discover why Tidemark earned four top rankings and nine leading positions in the latest version of the world’s largest and most comprehensive independent survey of planning and budgeting software.

The report includes over 20 pages of detailed analysis and commentary on Tidemark, covering:

- Users and use case demographics

- Support and implementation

- Planning content

- Workflow

- Driver-based planning

- Sales experience

- Competitiveness

Get your free copy of the full Tidemark report.

Tidemark empowers your corporate financial planning team to focus on what they do best.

“Tidemark gives us the functionality and flexibility to budget in the Workday data model and see the results at all levels of the organization.”

Get a Live Demo to See Tidemark in Action